The Internet of Things

So here I am seeing issues, reading about issues and trying to stop issues in the Internet of Things… Every day someone seems to be publishing articles on the issues and people are getting more aware (you’d think!), but there seems to be no real movement.

Some of my readers will know what I do for my day job; for those that don’t, I wrote the SORBS anti-spam system. It’s not quite the most hated, but some who should know better have said they just want me dead, then SORBS dead, then me killed again just to be sure I’m actually dead. Several years ago I spent Christmas sitting in front of my computers rewriting part of the system, particularly the part that finds “bad stuff” and reports it (e.g. open-relay servers). Whilst scanning hosts that were actively trying to send spam and/or viruses to me, I came across the web page of a fridge. The page half loaded before it became completely unresponsive, and tracing it I found it on an IP address that appeared to be in Rome (Italy)… When I reported my finding of a ‘Fridge Spamming’ to my boss all hell broke loose: blog articles were written, front pages were held and suddenly the world knew about ‘Fridges Spamming’. Shortly thereafter we got debunked by our main competitor of the time, who asserted it wasn’t possible; the article however sparked off massive research and watching of the technology from a security stance.

In July of the same year a bunch of researchers at a University found that the premise of the ‘debunking’ was actually false, and that with a specific sequence of commands it was possible to get the fridge concerned into a system ‘admin/debug’ mode that allowed a remote attacker to use the device as a simple proxy server and install other “apps”. Compared with the original report, this went largely unnoticed in the IoT industry. I never understood why… perhaps someone can explain that to me? 🙂

3 years later…

One would think we had learned something. We certainly have seen more of these types of attacks, not always for spam but just as a device to get into a network, to provide the doorway. Indeed the attackers have pretty much made an art out of it, using combinations of direct hacks, social engineering to gain access or persuade users to install things, and even stealing devices… The lists and lengths seem endless, especially when you consider who is doing this sort of thing and even who is paying whom… We’ve all heard about Trump and Russia and the controversy; well, there are teams of hackers in Russia whose sole income comes from breaking into systems and stealing secrets. It’s not a stretch to imagine that they are not unconnected… Personally I don’t go into the conspiracy theories, but I can tell you there are companies and persons of interest that do pay for the services of such teams, and not just Russian ones: there are European teams, Chinese teams, American teams etc.

The result is a lot more tech out there, all with security issues and all trying to keep market share, by innovating or by destroying the competition.

So why are we helping these people along? Why are we allowing companies to circumvent privacy laws? Why are they even trying? Why are there more and more companies dealing with security remediation rather than companies dealing with the actual problem…?

All questions for you the reader (and hopefully some people that can effect change.)

So what is this blog post about? Why did you write it?

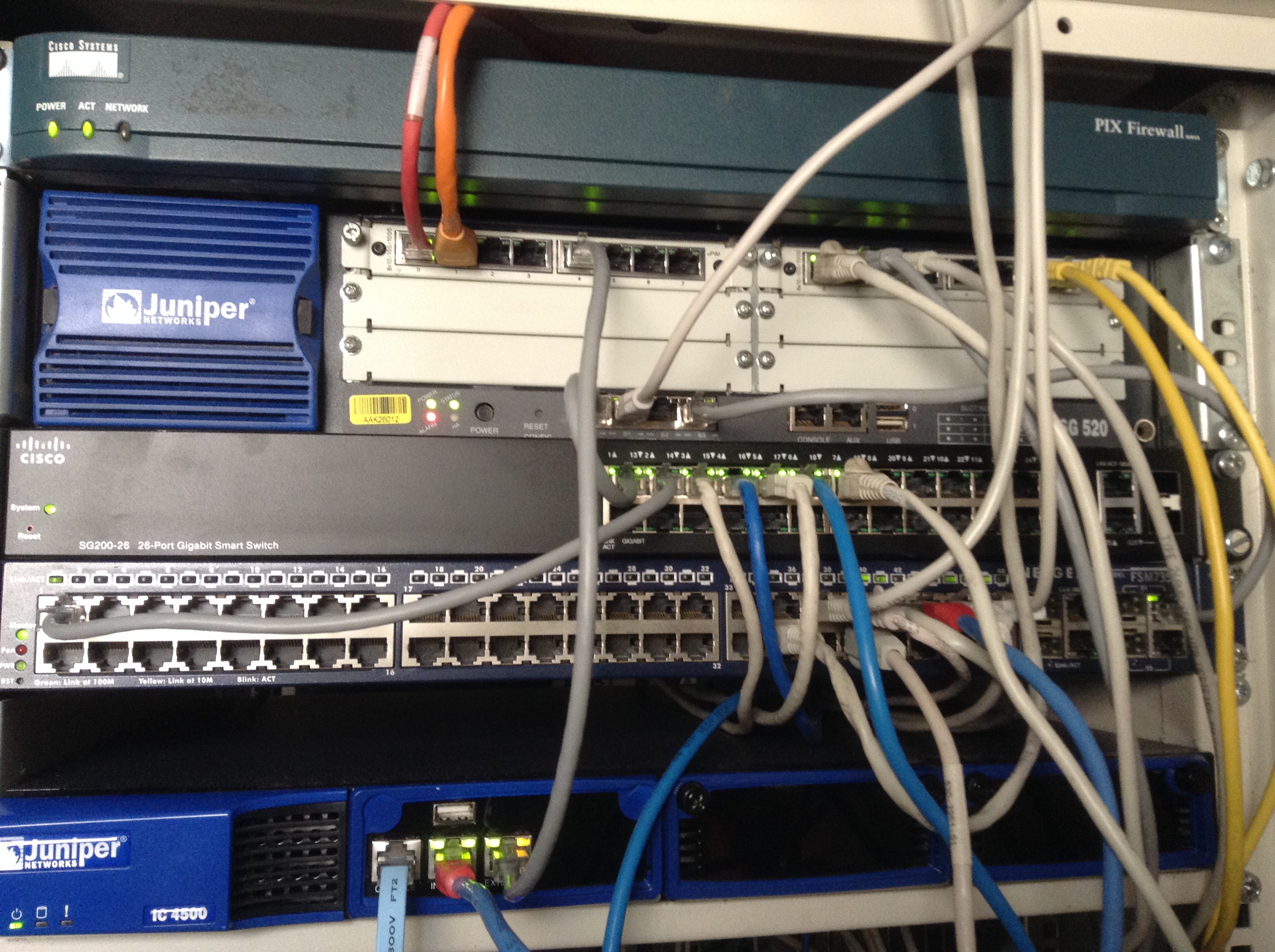

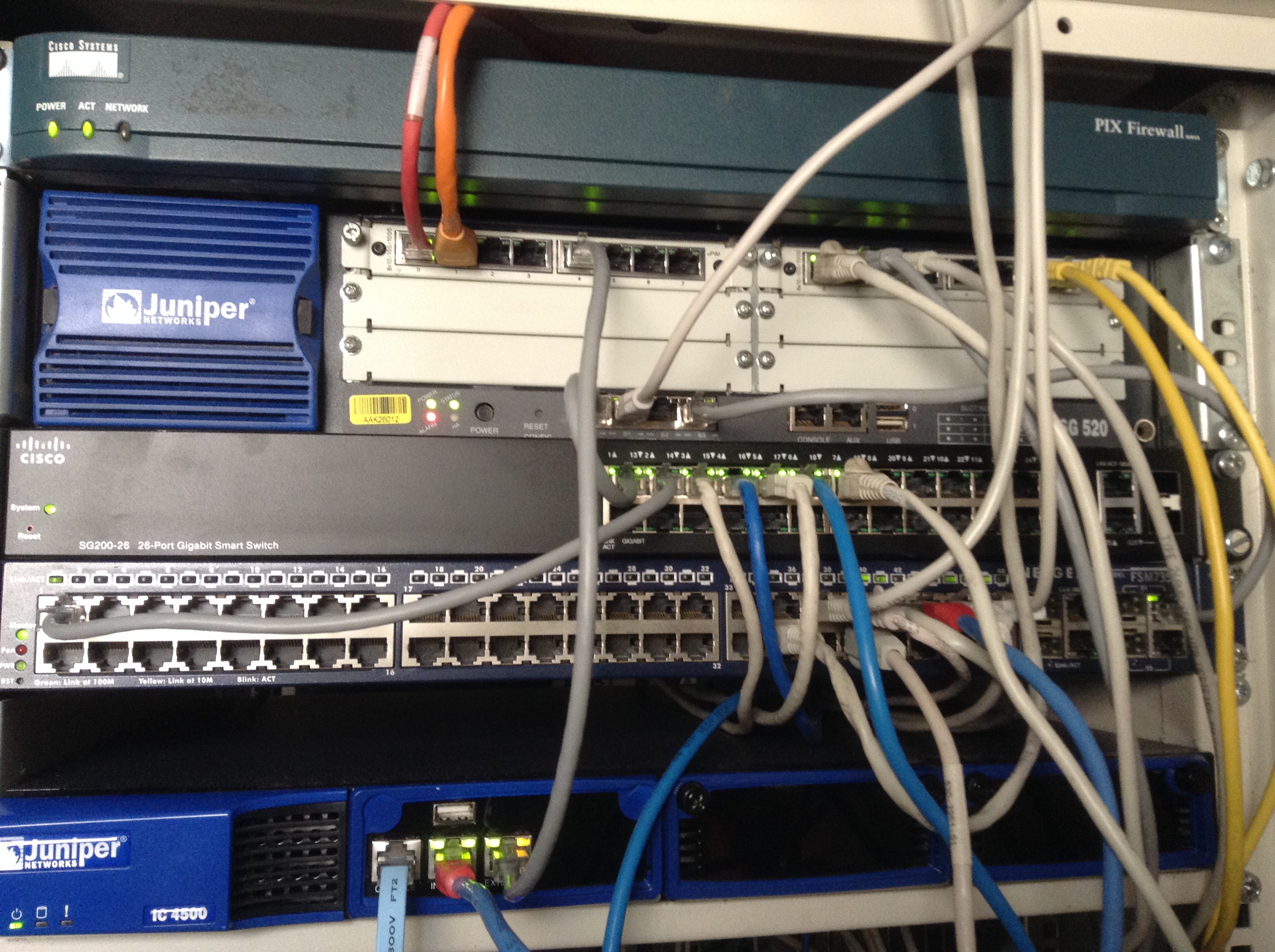

Well, quite simply, I chase down security patches for my services… You see, I still manage SORBS, and recently we moved some of the servers around to a new datacenter; as a consequence I changed a lot of security settings to make the systems more secure. The fallout of this was that I completely re-wired my home office network, and the only thing on my network now that is not ‘secured’ (i.e. may have issues) is my wireless network.

Originally I had an OpenVPN connection for every service over the wireless that was an ‘authorised machine’ and a straight session login for controlling access. I deliberately set the whole network to ‘Open’ (ie unencrypted) to remind people using it that everything can be watched so if it’s important, use HTTPS (or use the OpenVPN) etc.

I decided to switch the network to WPA2-Enterprise for authorised users, and to use a Juniper NAC to provide a captive portal and control the logins etc… I didn’t account for the ridiculous cost of the Juniper NAC licenses: even though I picked up a brand new IC4500 for less than €70, I couldn’t use it because the most basic license (to allow 25 devices to log in) is over €1200, and using the Captive Portal aspect (which is what I actually wanted) was going to cost over €4500… So I pulled it apart… I found that the IC4500 is just a dual-core, 1-RU server with a couple of gigs of RAM, an 80G hard drive and 2 Gigabit Ethernet ports. After changing the drive to something larger and a bit of fiddling, I put the OS I have been developing on it (BSD Server UNIX, BSDSUX for short) and now I have a captive portal of my own making. The last thing was to get the Access Points able to do both Open Security and WPA2-Enterprise at the same time, so that once logged in a user gets forced off the open wireless and allowed onto the secure wireless.

So finally to the point…

The Internet of Security Issues

Not so long ago a number of security vulnerabilities were hitting the headlines, ‘ShellShock’ in particular, so running Amped Wireless AP20000Gs around my home, which I happen to know run Linux, I was a little concerned. I had the latest firmware on the devices and this was dated a few years earlier (13 Dec 2012), so I emailed Amped Wireless about the issue and wasn’t actually told anything except that they’d review the bug. Time went by and more and more issues came up, and still no firmware… The latest one is CVE-2017-6074, which has been in the Linux kernel since at least 2006; in fact the vulnerability description states this:

The oldest version that was checked is 2.6.18 (Sep 2006), which is vulnerable. However, the bug was introduced before that, probably in the first release with DCCP support (2.6.14, Oct 2005).

Now the clueful of you would know that this is a local privilege escalation issue and when it comes to routers, APs etc you’d actually have to get on the device to exploit it. The same clueful will know that’s not as difficult as it might sound.

So figuring that I’m never going to get the firmware update I need/want I might as well go about hacking the router myself and building my own firmware that can indeed work with the IC4500 and finally finish securing my network to the level I want.

(and for those fed up with reading… if you haven’t worked it out… it’s 2017, the Access Point is classed as one of the ‘Internet of Things’ it is vulnerable to hacking on multiple fronts and 5 years later and I can’t get an update to the firmware – even though they are still selling these devices in shops!!!! … the gory horror for the techs is coming, so keep reading if you want…)

First things first when going down this path… Research the hardware and see what’s available… the Website ‘WikiDevi‘ is great for this and provides the following details…

CPU1: Realtek RTL8198 (620 MHz)

FLA1: 8 MiB (Macronix MX25L6406EM2I-12G)

RAM1: 64 MiB (Hynix H5PS5162GFR-S6C)

WI1 chip1: Realtek RTL8192DR

WI1 802dot11 protocols: an

WI1 MIMO config: 2×2:2

WI1 antenna connector: RP-SMA

WI2 chip1: Realtek RTL8192CE

WI2 802dot11 protocols: bgn

WI2 MIMO config: 2×2:2

WI2 antenna connector: RP-SMA

ETH chip1: Realtek RTL8198

Switch: Realtek RTL8198

LAN speed: 10/100/1000

LAN ports: 4

WAN speed: 10/100/1000

WAN ports: 1

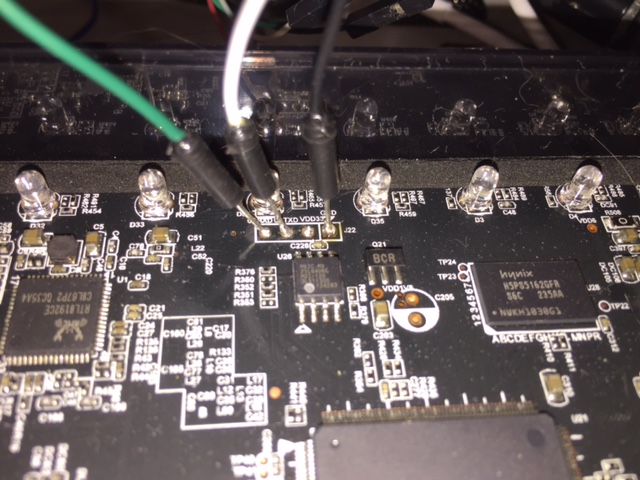

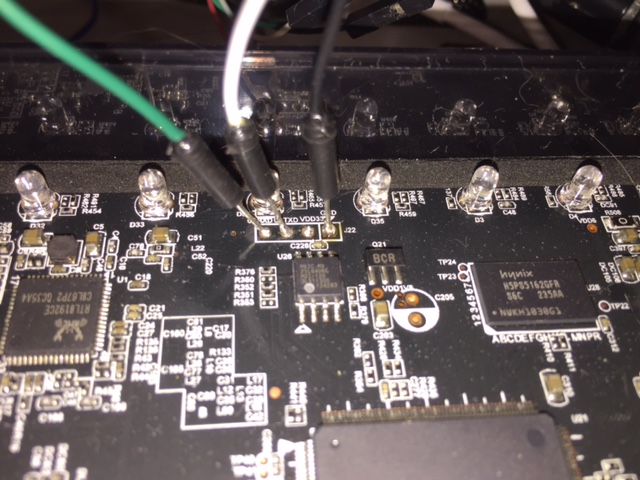

Which also tells me that normal OpenWRT support is not available (they mostly don’t support RealTek devices).. but with more looking (and the WikiDevi page now says it) there is RealTek support by some authors. Looking up the chips I also get information that there is JTAG support (which is basically a serial port for debugging), so I got to work with my screwdriver and soldering iron and this was the result…

Applying power then produced the following in a minicom session.

Booting...?

========== SPI =============

SDRAM CLOCK:181MHZ

------------------------- Force into Single IO Mode ------------------------

|No chipID Sft chipSize blkSize secSize pageSize sdCk opCk chipName |

| 0 c22017h 0h 800000h 10000h 1000h 100h 86 30 MX6405D/05E/45E|

----------------------------------------------------------------------------

Reboot Result from Watchdog Timeout!

---RealTek(RTL8198)at 2012.04.12-16:11+0800 version v1.2 [16bit](620MHz)

no sys signature at 00010000!

no sys signature at 00020000!

no sys signature at 00030000!

no sys signature at 00140000!

no rootfs signature at 000E0000!

no rootfs signature at 000F0000!

no rootfs signature at 00130000!

no rootfs signature at 00240000!

Jump to image start=0x80500000...

decompressing kernel:

Uncompressing Linux... done, booting the kernel.

done decompressing kernel.

start address: 0x80003640

RTL8192C/RTL8188C driver version 1.6 (2011-07-18)

Probing RTL8186 10/100 NIC-kenel stack size order[3]...

chip name: 8196C, chip revid: 0

NOT YET

eth0 added. vid=9 Member port 0x1...

eth1 added. vid=8 Member port 0x10...

eth2 added. vid=9 Member port 0x2...

eth3 added. vid=9 Member port 0x4...

eth4 added. vid=9 Member port 0x8...

[peth0] added, mapping to [eth1]...

init started: BusyBox v1.13.4 (2012-12-13 11:08:29 CST)

Init Start...

Init bridge interface...

killall: smbd: no process killed

killall: nmbd: no process killed

basename(1)

basename(2 /sys/block/sda)

basename(2 /block/sda)

basename(2 /sda)

basename(3 sda)

basename(1)

basename(2 /sys/block/sda)

basename(2 /block/sda)

basename(2 /sda)

basename(3 sda)

basename(1)

basename(2 /sys/block/sda/sda1)

basename(2 /block/sda/sda1)

basename(2 /sda/sda1)

basename(2 /sda1)

basename(3 sda1)

basename(1)

basename(2 /sys/block/sda/sda1)

basename(2 /block/sda/sda1)

basename(2 /sda/sda1)

basename(2 /sda1)

basename(3 sda1)

try_mount(1) sda1, /var/tmp/usb/sda1

CMD: /bin/ntfs-3g /dev/sda1 /var/tmp/usb/sda1 -o force

Error opening '/dev/sda1': No such device or address

Failed to mount '/dev/sda1': No such device or address

Either the device is missing or it's powered down, or you have

SoftRAID hardware and must use an activated, different device under

/dev/mapper/, (e.g. /dev/mapper/nvidia_eahaabcc1) to mount NTFS.

Please see the 'dmraid' documentation for help.

Init Wlan application...

WiFi Simple Config v2.3 (2011.11.08-13:04+0000).

Register to wlan0

Register to wlan1

route: SIOCDELRT: No such process

iwcontrol RegisterPID to (wlan0)

iwcontrol RegisterPID to (wlan1)

$$$ eth1 & eth0 up $$$

IEEE 802.11f (IAPP) using interface br0 (v1.7)

#

As one can see, it drops straight in at a root prompt (no login – but hey, you need to physically connect to it with a soldering iron…), and we can see it’s running BusyBox, which means it’s running ash not bash, so it’s not vulnerable to Shellshock (nice of the company to tell me!??!?!)… But confirmed….

# x='() { :;}; echo VULNERABLE' ash -c :

#
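For anyone wanting to repeat that check across several shells from a workstation, here is a minimal Python sketch of the same probe. It assumes Python 3.7+ and a Unix-like machine; the function names are made up for illustration, and it only covers the original Shellshock payload shown above, not the later variants.

```python
import os
import shutil
import subprocess

# Same payload as the manual test: a function-style value with a trailing command.
PAYLOAD = "() { :;}; echo VULNERABLE"


def output_shows_shellshock(stdout: str) -> bool:
    """A vulnerable shell runs the trailing 'echo' while importing the variable."""
    return "VULNERABLE" in stdout


def probe_shell(shell: str) -> bool:
    """Run `<shell> -c :` with the payload stored in the environment variable x."""
    env = dict(os.environ, x=PAYLOAD)
    result = subprocess.run([shell, "-c", ":"], env=env,
                            capture_output=True, text=True, timeout=10)
    return output_shows_shellshock(result.stdout)


if __name__ == "__main__":
    # Probe whichever of these shells happen to be installed locally.
    for name in ("ash", "bash", "sh"):
        path = shutil.which(name)
        if path is None:
            print(f"{name}: not installed")
        elif probe_shell(path):
            print(f"{name}: VULNERABLE")
        else:
            print(f"{name}: no output, same as the empty result above")
```

An empty result, like the bare `#` prompt above, means the shell did not execute the trailing command.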

So what about the latest bug that goes back to 2006… well…

# cat /proc/version

Linux version 2.6.30.9 (kevinlin@localhost.localdomain) (gcc version 3.4.6-1.3.6) #603 Thu Dec 13 15:14:20 CST 2012

That would be a yes then… In fact we can see that this OS was made with the old version of the RealTek SDK…

# cat /etc/version

RTL8198 v1.0 -- Thu Dec 13 15:13:43 CST 2012

The SDK version is: Realtek SDK v2.5-r7984

Ethernet driver version is: 7953-7929

Wireless driver version is: 7977-7977

Fastpath source version is: 7873-6572

Feature support version is: 7927-7480
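The version comparison behind that “yes” can be sketched in a few lines of Python. The lower bound is the 2.6.14 guess from the advisory quoted earlier; the upper bound of 4.9.11 is an assumption taken from the wording of the CVE entry and should be checked against it. The function names are hypothetical. A version match only says the kernel falls in the affected range; it does not prove DCCP support was built in, and vendors sometimes backport fixes without changing the version string.

```python
import re

# Lower bound: the advisory quoted earlier guesses 2.6.14 (first release with DCCP).
FIRST_AFFECTED = (2, 6, 14)
# Upper bound: ASSUMPTION, based on the CVE entry's "through 4.9.11" wording.
LAST_AFFECTED = (4, 9, 11)


def parse_kernel_version(proc_version: str) -> tuple:
    """Extract the dotted kernel version from /proc/version text as a tuple of ints."""
    match = re.search(r"Linux version (\d+(?:\.\d+)+)", proc_version)
    if match is None:
        raise ValueError("no kernel version found")
    return tuple(int(part) for part in match.group(1).split("."))


def in_affected_range(proc_version: str) -> bool:
    """True if the reported version lies between the two bounds (inclusive)."""
    return FIRST_AFFECTED <= parse_kernel_version(proc_version) <= LAST_AFFECTED


if __name__ == "__main__":
    banner = ("Linux version 2.6.30.9 (kevinlin@localhost.localdomain) "
              "(gcc version 3.4.6-1.3.6) #603 Thu Dec 13 15:14:20 CST 2012")
    print(parse_kernel_version(banner), in_affected_range(banner))
```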

So my next trick is to work out which GPIO pins I need to manipulate to get the power output control of the Skyworks (SiGe) SE5004L / 5004L power amplifiers under my control, but that’s digressing from the topic of this post. Poking around looking for the details, I found something else rather interesting…

# ps -ax

PID USER VSZ STAT COMMAND

1 root 1576 S init

2 root 0 SW< [kthreadd]

3 root 0 SW< [ksoftirqd/0]

4 root 0 SW< [events/0]

5 root 0 SW< [khelper]

8 root 0 SW< [async/mgr]

61 root 0 SW< [kblockd/0]

71 root 0 SW< [khubd]

88 root 0 SW [pdflush]

89 root 0 SW< [kswapd0]

649 root 0 SW< [mtdblockd]

870 root 13760 S /bin/smbd -D -s /var/smb.conf

878 root 13808 S /bin/smbd -D -s /var/smb.conf

882 root 6508 S /bin/nmbd -D -s /var/smb.conf

902 root 960 S iapp br0 wlan0 wlan1

913 root 1260 S wscd -start -c /var/wsc-wlan1.conf -w wlan1 -fi /var/

917 root 984 S iwcontrol wlan0 wlan1

942 root 1008 S dnrd --cache=off -s 168.95.1.1

951 root 956 S reload -k /var/wlsch.conf

984 root 2168 S webs

985 root 1584 S -/bin/sh

1021 root 1576 R ps -ax

#

.. That little thing that says “dnrd --cache=off -s 168.95.1.1”. This program is a DNS relay server, i.e. something to help you resolve the names we know and are used to, like “www.microsoft.com”, into the dotted quad that the computers can deal with, called an ‘IP address’. The reason I’m pointing it out is that 168.95.1.1 is not something I have configured and it is not something on my network, so it piqued my curiosity. Turns out it belongs to a Taiwanese company, “Chunghwa Telecom Co., Ltd”.

$ host 168.95.1.1

1.1.95.168.in-addr.arpa domain name pointer dns.hinet.net.

$ whois hinet.net

.

.

.

Server Name: HINET.NET.TW

Registrar: MELBOURNE IT, LTD. D/B/A INTERNET NAMES WORLDWIDE

Whois Server: whois.melbourneit.com

Referral URL: http://www.melbourneit.com.au

Domain Name: HINET.NET

Registrar: NETWORK SOLUTIONS, LLC.

Sponsoring Registrar IANA ID: 2

Whois Server: whois.networksolutions.com

Referral URL: http://networksolutions.com

Name Server: ANS1.HINET.NET

Name Server: ANS2.HINET.NET

Status: ok https://icann.org/epp#ok

Updated Date: 02-feb-2017

Creation Date: 19-mar-1994

Expiration Date: 20-mar-2018

.

.

.

Domain Name: HINET.NET

Registry Domain ID: 2854475_DOMAIN_NET-VRSN

Registrar WHOIS Server: whois.networksolutions.com

Registrar URL: http://networksolutions.com

Updated Date: 2017-03-05T15:11:26Z

Creation Date: 1994-03-19T05:00:00Z

Registrar Registration Expiration Date: 2018-03-20T04:00:00Z

Registrar: NETWORK SOLUTIONS, LLC.

Registrar IANA ID: 2

Registrar Abuse Contact Email: abuse@web.com

Registrar Abuse Contact Phone: +1.8003337680

Reseller:

Domain Status: ok https://icann.org/epp#ok

Registry Registrant ID:

Registrant Name: Internet Dept., DCBG, Chunghwa Telecom Co., Ltd.

Registrant Organization: Internet Dept., DCBG, Chunghwa Telecom Co., Ltd.

Registrant Street: Data-Bldg, No. 21 Sec.1, Hsin-Yi Rd.

Registrant City: Taipei

Registrant State/Province: Taiwan

Registrant Postal Code: 100

Registrant Country: TW

Registrant Phone: +886.223444720

Registrant Phone Ext:

Registrant Fax: +886.223960399

Registrant Fax Ext:

Registrant Email: vnsadm@hinet.net

Registry Admin ID:

Admin Name: Internet Dept., DCBG, Chunghwa Telecom Co., Ltd.

Admin Organization: Internet Dept., DCBG, Chunghwa Telecom Co., Ltd.

Admin Street: Data-Bldg, No. 21 Sec.1, Hsin-Yi Rd.

Admin City: Taipei

Admin State/Province: Taiwan

Admin Postal Code: 100

Admin Country: TW

Admin Phone: +886.223444720

Admin Phone Ext:

Admin Fax: +886.223960399

Admin Fax Ext:

Admin Email: vnsadm@hinet.net
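The spotting of that stray resolver can be automated. Below is a minimal Python sketch that pulls the `-s` upstream addresses out of a saved process listing and flags any that are not on a list of resolvers you expect. The function names and the allowed address 192.168.1.1 are hypothetical placeholders, and the parser only understands the `dnrd ... -s <address>` form seen in the listing above.

```python
import ipaddress

# One line copied from the process listing above.
SAMPLE_PS = "  942 root      1008 S    dnrd --cache=off -s 168.95.1.1"


def dns_relay_upstreams(ps_output: str) -> list:
    """Return every address that follows a -s flag on a dnrd command line."""
    upstreams = []
    for line in ps_output.splitlines():
        tokens = line.split()
        if "dnrd" not in tokens:
            continue
        args = tokens[tokens.index("dnrd") + 1:]
        for position, arg in enumerate(args):
            if arg == "-s" and position + 1 < len(args):
                upstreams.append(args[position + 1])
    return upstreams


def unexpected_upstreams(upstreams, allowed) -> list:
    """Keep only the upstream resolvers that are not in the allowed set."""
    return [address for address in upstreams if address not in allowed]


if __name__ == "__main__":
    allowed = {"192.168.1.1"}  # hypothetical: your own resolver goes here
    for address in unexpected_upstreams(dns_relay_upstreams(SAMPLE_PS), allowed):
        ip = ipaddress.ip_address(address)
        # reverse_pointer is the name a PTR lookup (like `host`) queries.
        print(address, "private" if ip.is_private else "public", ip.reverse_pointer)
```

The `reverse_pointer` value is the same `in-addr.arpa` name that appears in the `host` output above.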

So not only is this Access Point vulnerable to hacking, it would also be sending a DNS query for every site I visit to a server in Taiwan… Well, not quite, because unlike most home users I am using my own DNS servers and have specifically blocked the access points from talking to the Internet… I am not your average home user though. That leads me to the following conclusion that some will find scary…

The Conclusion…

The biggest current threat to our networks, our privacy, and our electronic identities (including funds) is the threat of the Internet of Things that have not been patched.

This threat is massive, as the clueful people out there often can’t patch: the companies selling the devices are not providing security fixes, because their profit is about getting new devices out there, not fixing old devices.

It’s even bigger because most of the world are not techs; they don’t know how to update the firmware, or where an update would even be available if they did.

…Yet we’re all connecting up to the Internet, we’re all buying these boxes from household temperature controls available on your phone to Smart TVs and Fridges… even ‘Smart Bulbs‘!

All of which have the ability to run code, all of which have potential security issues, and all of which can provide the unethical people out there with ‘doorways into your home’.